New crawl options — crawl password-protected sites, custom robots.txt, custom user agent, and more!

Dragon Metrics Site Auditor crawls your site to find over 70 common SEO and user experience issues that are affecting it. It’s an incredibly powerful tool, but until now it could only be run on a site that was already live.

Surely it would be better to find and fix these issues before visitors and search engines do, right?

That’s exactly what today’s new feature launch makes possible. Now, no matter how you’ve locked down your test or staging environment, Dragon Metrics can still crawl it and provide a complete in-depth analysis, just as if the site were publicly available.

Let’s take a quick look at all the new crawl options available:

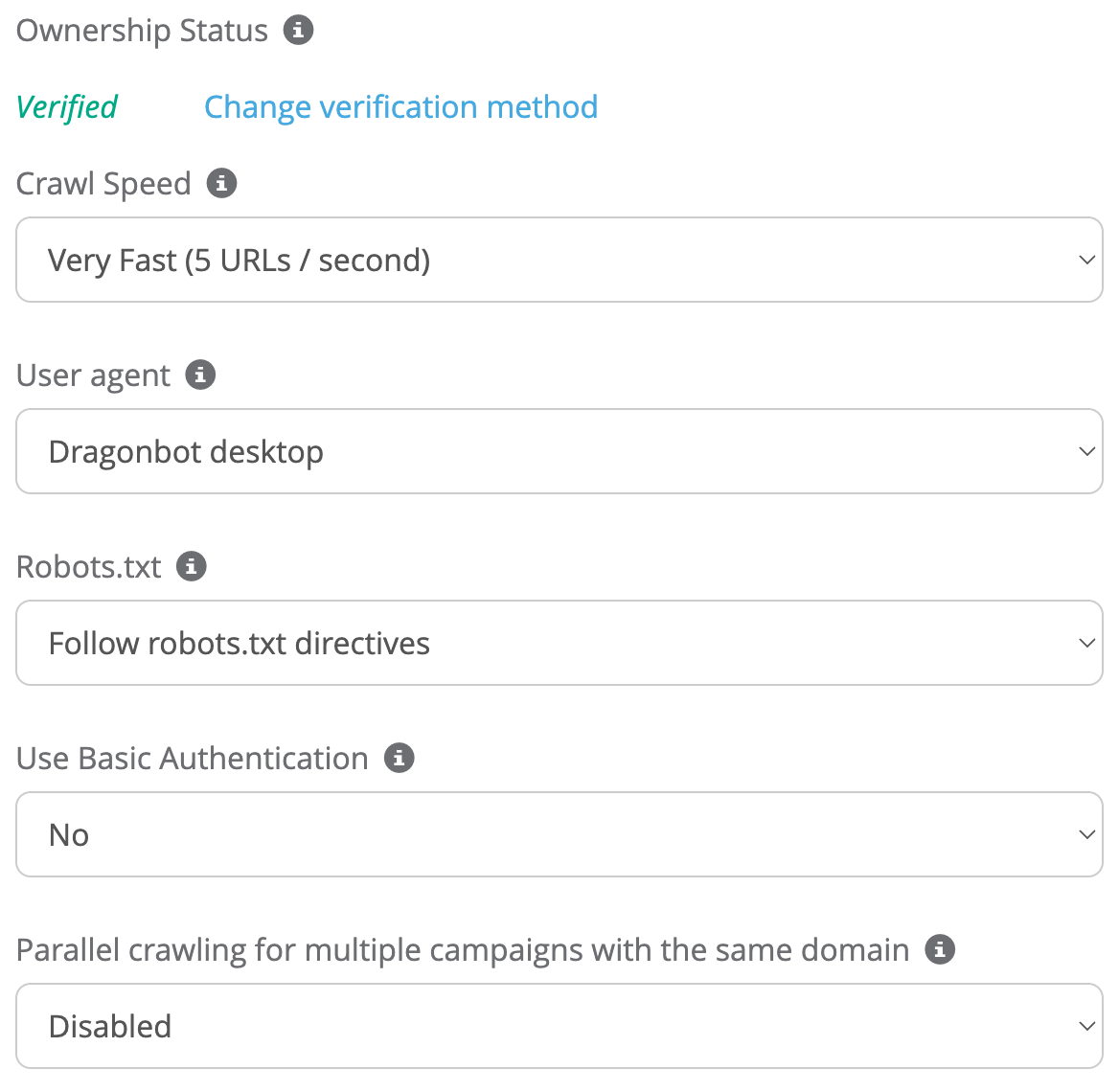

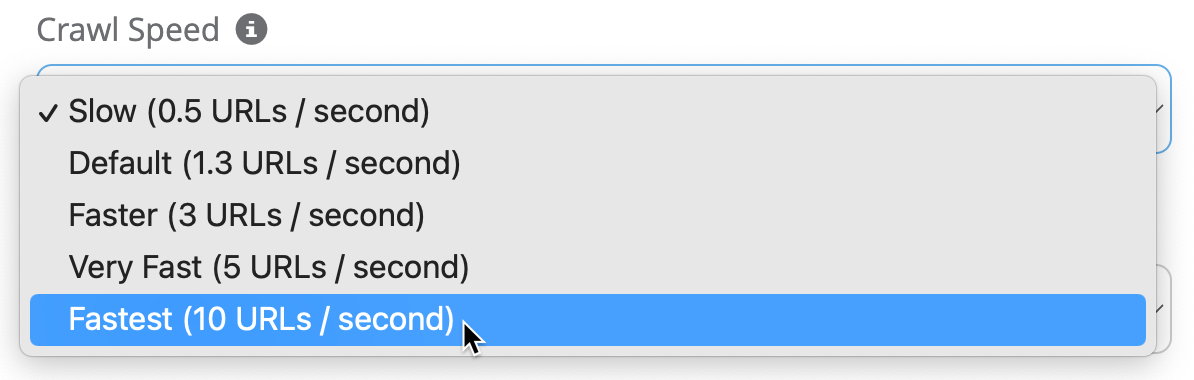

We all want our data faster, right? With crawling, speed is a double-edged sword. Crawl too fast and you risk overloading the server or getting errors, but crawl too slowly and you’re waiting forever for your crawl to complete. This is especially true of very large sites: at 1 URL / second, a site with around 300,000 URLs takes 3.5 days to crawl in a best-case scenario.

That’s why we’re introducing even more crawling speed options, including an ultra-fast 10 URLs / second.
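
To put these speeds in perspective, here is a rough back-of-the-envelope sketch in Python. The function name and the 302,400-URL site size are assumptions chosen for illustration (that size is simply what makes 1 URL / second work out to 3.5 days), and real crawls will be slower once response times, retries, and errors are factored in.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def crawl_duration_days(url_count: int, urls_per_second: float) -> float:
    """Best-case crawl time in days, ignoring latency, retries, and errors."""
    if urls_per_second <= 0:
        raise ValueError("urls_per_second must be positive")
    return url_count / urls_per_second / SECONDS_PER_DAY


# Assumed site size, picked so that 1 URL / second takes exactly 3.5 days.
site_size = 302_400

for rate in (1, 5, 10):
    days = crawl_duration_days(site_size, rate)
    print(f"{rate:>2} URLs / second: {days:.2f} days")
```

Under these assumptions, moving from 1 to 10 URLs / second cuts the same crawl from 3.5 days to roughly a third of a day.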

Crawling this fast can get you in trouble if you’re not authorized to do so or the server isn’t set up to handle this speed, so enabling this setting requires verifying site ownership (more on that below).

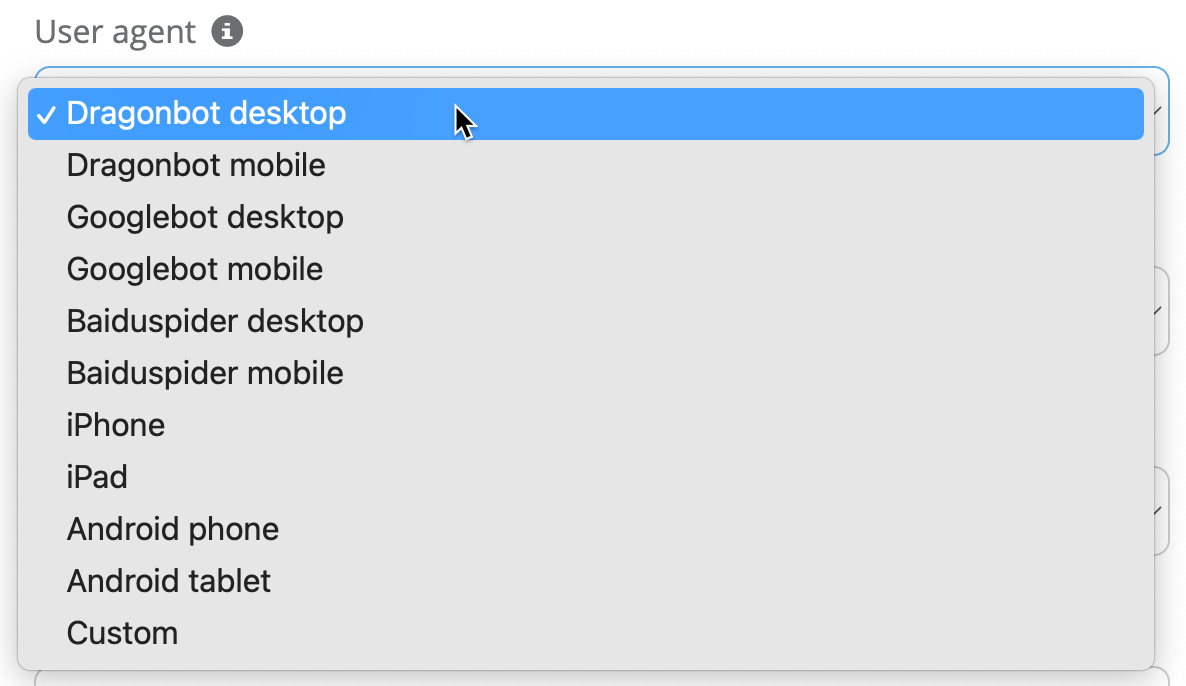

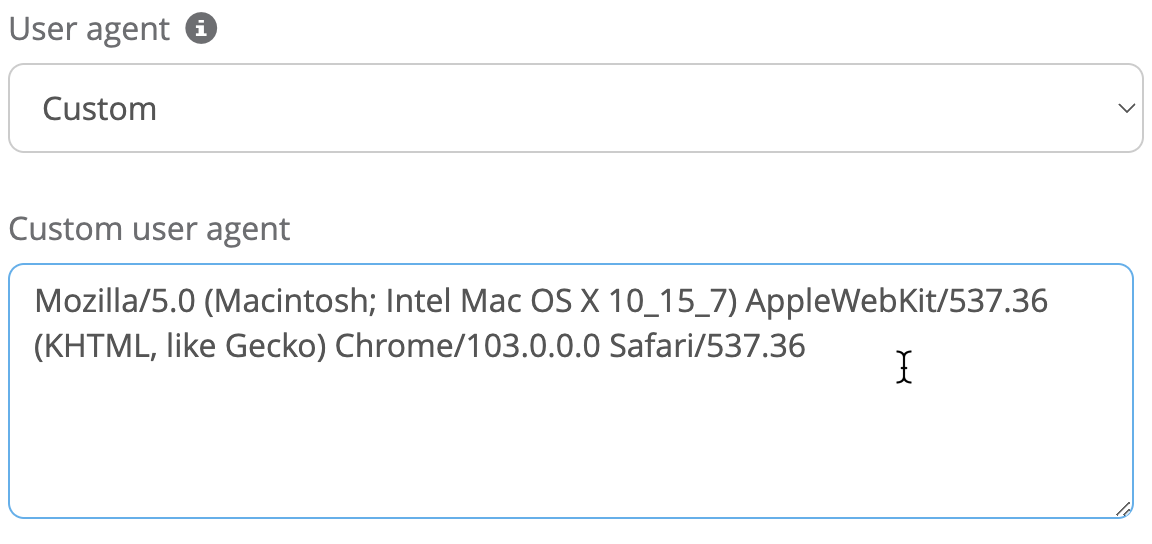

You can now choose your user agent for crawls, which opens up a whole new world of possibilities.

In addition to picking one of the standard options above, you can use any custom UA string you’d like.

To make sure our crawler stays a “good bot”, using a custom user agent requires verifying site ownership.
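
If you’re wondering what a custom user agent actually changes, it is just the `User-Agent` header sent with every request. The sketch below uses Python’s standard library to build such a request so you can preview how your own server responds to a given UA string. The helper name, the `MyStagingAuditBot/1.0` string, and the URL are hypothetical placeholders, not values used by Dragon Metrics.

```python
import urllib.request


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying a custom User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


# Hypothetical UA string and URL, for illustration only.
request = build_request("https://staging.example.com/", "MyStagingAuditBot/1.0")

# urllib stores header names capitalized as "User-agent".
print(request.get_header("User-agent"))

# To actually send it against a server you control:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Sending the same URL with a few different UA strings is a quick way to spot servers that serve different content, or block requests outright, based on the user agent.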

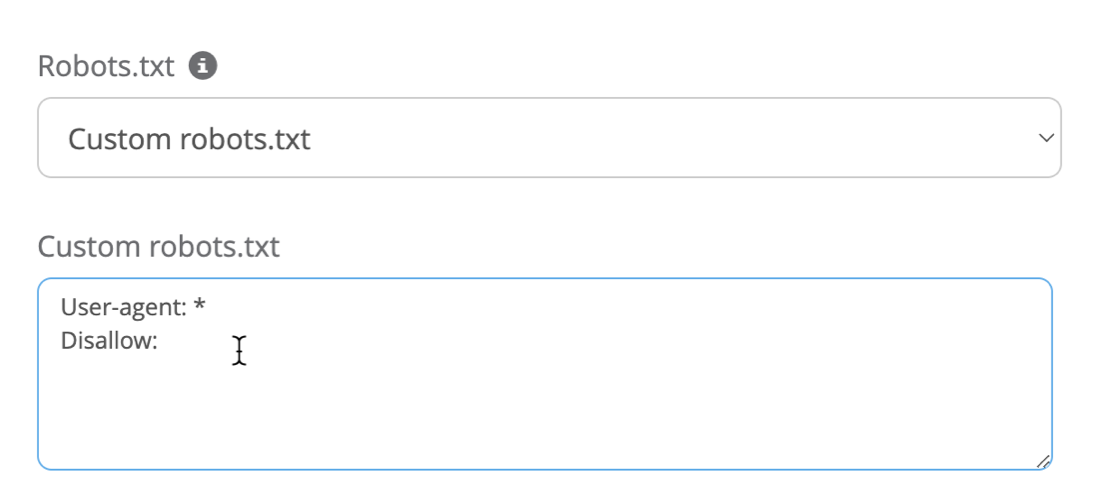

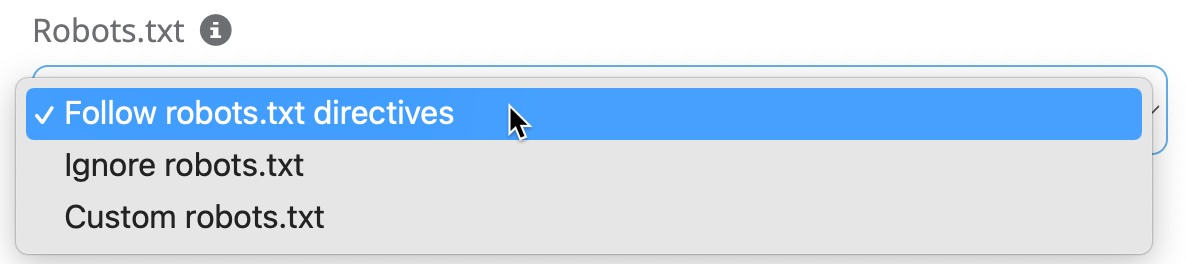

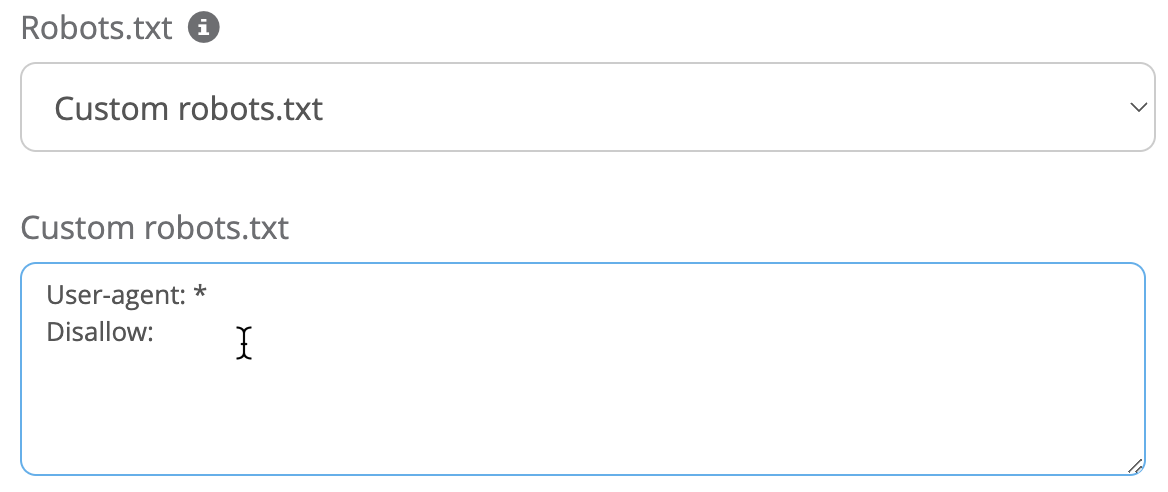

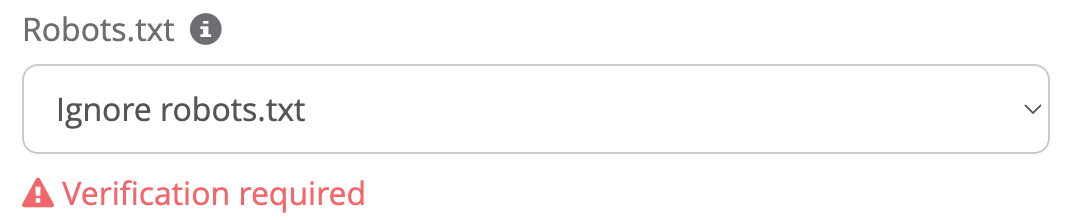

By default our crawler abides by all robots.txt settings, but you can now choose to completely ignore the robots.txt or use any custom rules you’d like!

This is perfect for crawling testing / staging environments or sites that have restrictive crawling settings.

Just like overriding the user agent string, customizing robots.txt requires verifying site ownership so our crawler isn’t used maliciously.
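
To see why custom rules matter for staging sites, here is a minimal sketch using Python’s built-in `urllib.robotparser`. It compares a typical “block everything” staging robots.txt with a more permissive custom rule set. The rule sets, the URL, and the use of `Dragonbot` as the user agent token are assumptions for illustration; this only approximates how a crawler evaluates the rules and is not Dragon Metrics’ own implementation.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A common staging setup: block every crawler from the whole site.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""

# Hypothetical custom rules: allow everything except the admin area.
CUSTOM_ROBOTS = """\
User-agent: *
Disallow: /admin/
"""

url = "https://staging.example.com/products/"
print(is_allowed(STAGING_ROBOTS, "Dragonbot", url))  # False
print(is_allowed(CUSTOM_ROBOTS, "Dragonbot", url))   # True
```

With the staging rules, a crawler that respects robots.txt would never get past the home page. Swapping in custom rules lets the crawl proceed without touching the robots.txt file on the server itself.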

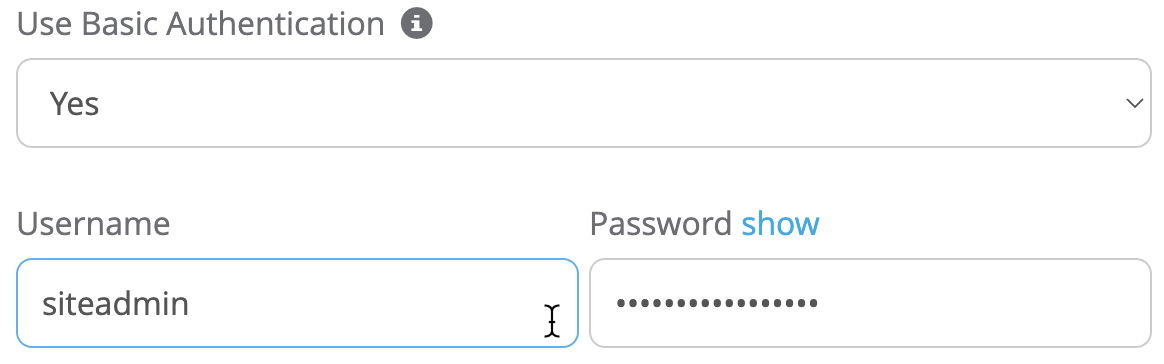

This is one of our most-requested features: you can now supply a username and password to crawl sites protected by HTTP Basic Authentication. Now you can crawl even the most restrictive sites before they’re launched to the public!
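
For the curious, HTTP Basic Authentication works by sending the username and password, joined by a colon and Base64-encoded, in an `Authorization` header on each request. The Python sketch below shows how that header value is built. The function name and the credentials are hypothetical and are not tied to any Dragon Metrics setting.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Return the Authorization header value for HTTP Basic Authentication."""
    credentials = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


# Hypothetical staging credentials, for illustration only.
header_value = basic_auth_header("staging-user", "correct-horse")
print(f"Authorization: {header_value}")
```

Base64 is an encoding, not encryption, so it’s worth serving a password-protected staging site over HTTPS.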

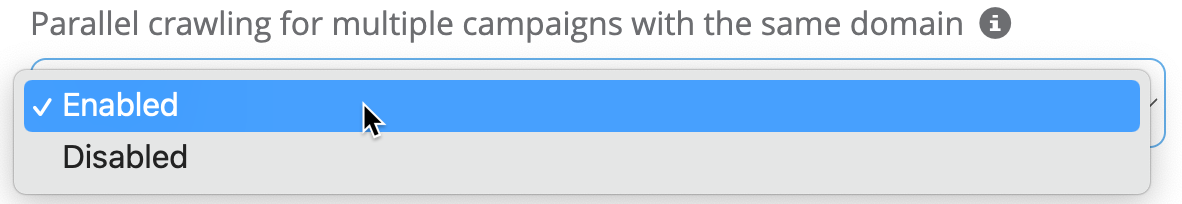

We’ve added a new setting that will help users in one very specific situation get their crawls finished much faster. Most people won’t need this setting, so feel free to skip this part, as it needs a bit more explanation.

There may be times when multiple campaigns that share the same root domain are scheduled to be crawled at the same time.

If these crawls happened simultaneously, each campaign’s crawl speed would stay within its own maximum crawl rate setting, but since they’re all for the same domain, the site could be hit with far more requests per second than expected.

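For example, suppose several campaigns share the root domain example.com, each with a maximum crawl rate of 5 URLs / second (six such campaigns, say).

In this case, the site owner may expect their website (example.com) to be crawled at 5 URLs / second. But since the campaigns were all created at the same time, their crawls would begin simultaneously, and the site would be crawled at a combined 30 URLs / second, potentially overloading the server.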

For this reason, Dragonbot will crawl only one campaign per root domain at a time. This ensures that sites are not hit with a crawl rate faster than expected, but it can sometimes mean crawls take longer to finish.

If you want these campaigns to be crawled at the same time, you can enable parallel crawling for them.
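
The trade-off can be sketched in a few lines of Python. The function below is a simplified model, not Dragonbot’s actual scheduler: it assumes every campaign crawls at its full maximum rate, and the six campaigns at 5 URLs / second are an illustrative setup.

```python
def peak_request_rate(campaign_rates: list[float], parallel: bool) -> float:
    """Worst-case URLs / second hitting one root domain.

    campaign_rates holds each campaign's maximum crawl rate. When campaigns
    run in parallel their rates add up; when they run one at a time, the
    peak is simply the fastest single campaign.
    """
    if not campaign_rates:
        return 0
    return sum(campaign_rates) if parallel else max(campaign_rates)


# Illustrative setup: six campaigns on one root domain, 5 URLs / second each.
rates = [5, 5, 5, 5, 5, 5]

print(peak_request_rate(rates, parallel=False))  # 5
print(peak_request_rate(rates, parallel=True))   # 30
```

So before enabling parallel crawling, it’s worth adding up the crawl rates of every campaign on the domain and checking that the server can comfortably handle the total.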

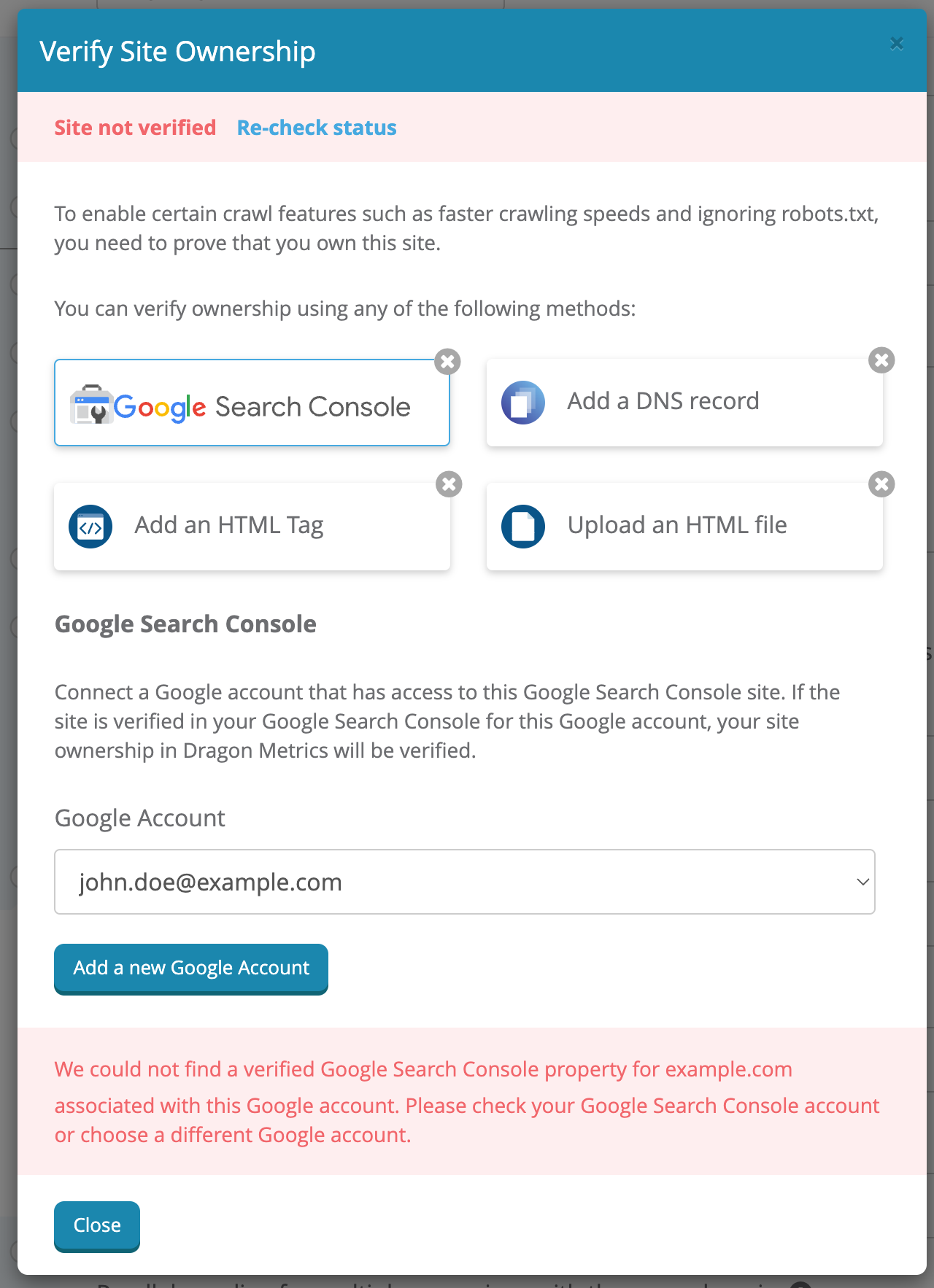

Since some of these new settings could be abused or make Dragonbot behave as a “bad bot”, enabling them requires verifying site ownership.

Verification proves to Dragon Metrics that you own or manage the site and that you’re authorized to enable these advanced options.

Verifying your site is relatively easy. If you have access to Google Search Console for this site and have already integrated it with this campaign, verification will happen automatically.

If not, you can also verify by uploading an HTML file to the server, adding a tag to the home page, or adding a DNS record.

Once verification is complete, you’re good to go!

We’re so excited to help site owners find and fix issues before their sites go live, finish crawls faster, see mobile crawls, and more with this update. Give the new options a try and let us know what you think!